Having worked in test development for more than two decades, I’ve watched educational testing evolve dramatically. The change shows up not only in the technology used to assess students but also in people’s understanding of what testing is all about. I’m happy to say that administrators, principals, teachers, and even families understand testing far more deeply than they used to.

However, test developers’ misuse of the word “diagnostic” remains a travesty that affects every classroom. As a test developer myself, I know that a diagnostic is a test that informs instruction by explaining why a particular student is struggling or doing well in a subject. Yet test developers continue to apply the word to reports generated by screeners or summative assessments.

Screeners are shorter than diagnostics and provide quick results; they should not be confused with formative assessments. If I want to test students’ short-vowel knowledge, I can give them a brief quiz. The scores will give me a quick yes-or-no answer as to whether they have mastered the skill, but they won’t give me the detail I need to remediate. Screeners for “reading” or “math” summarize a student’s overall ability in these broad areas without zeroing in on specific skills within them; all they can really say is whether a student is doing well or poorly. Their diagnostic value is close to nothing.

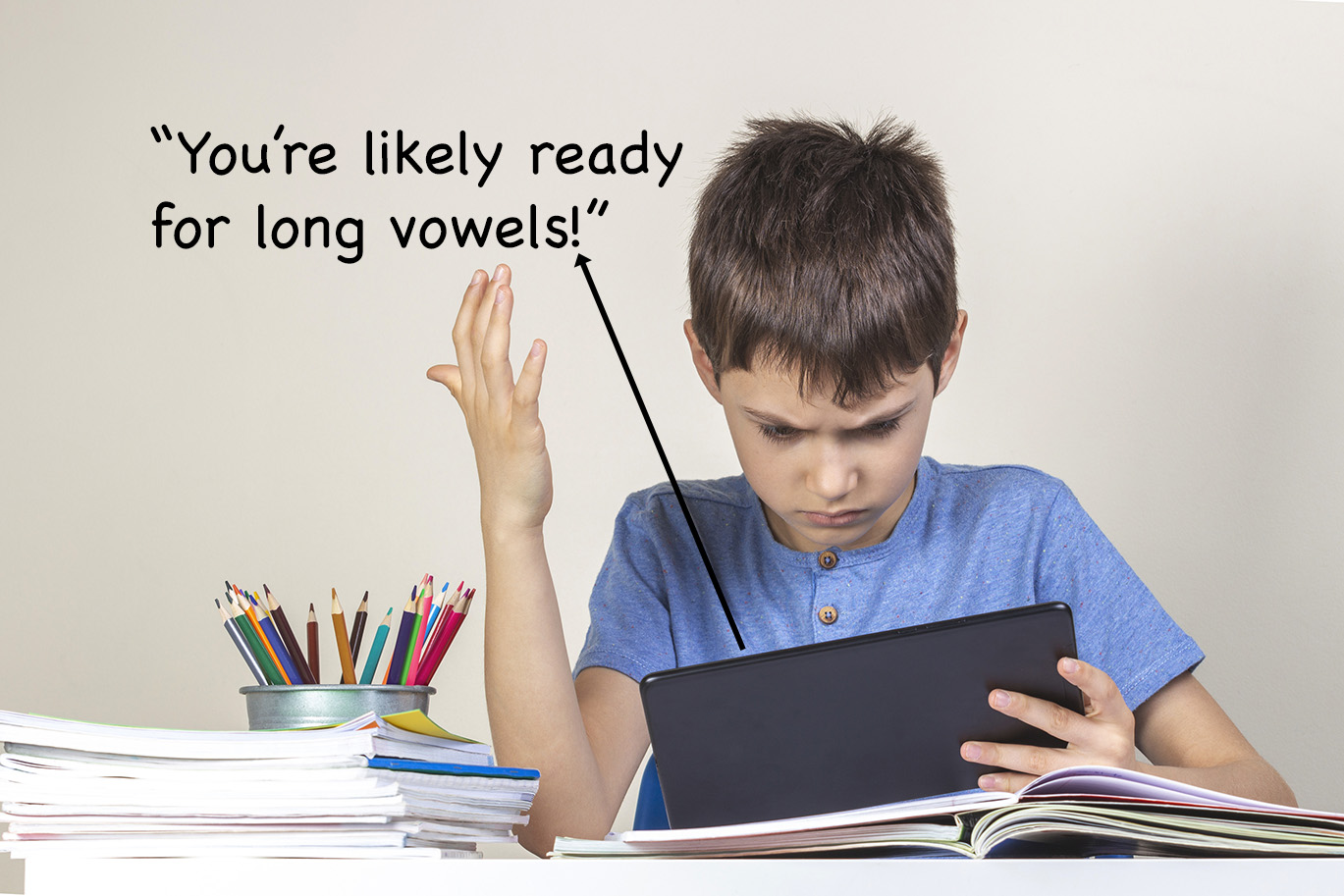

The big “gotcha” with screener and summative assessment companies is that they take their vague data and stretch it into long, verbose student reports. Their reports may say that a student is “developmentally ready” to learn to multiply a two-digit number by a one-digit number, or that a student is “likely ready” to learn long vowels. Statements like these are inferences about how students with similar scores tend to perform, not findings about what this particular student knows. That breaks the cardinal rule of data analysis: you never take data on the many and apply it to the individual.

It would be like telling my doctor I have a massive headache, only to have the doctor skip any further examination and simply tell me to take two ibuprofen because that helps 60% of people. But what about the other 40%, or the 0.05% with a brain tumor, the 5% with an allergy-related headache, or the 5% with a sinus infection? See more examples in a blog post by K.O. Wedekind, a veteran Fairfax County Public Schools teacher.

It’s not just screeners that break this cardinal rule. Lengthy assessments designed primarily for accountability can also fail to provide diagnostic data when their design and length are driven by the need to predict state accountability test results with high accuracy. NWEA’s MAP is a good example.

It is fine to use screeners and long summative assessments when appropriate, but it is unethical to depend on student reports that use vague, misleading language such as “developmentally ready” or “likely ready.” Educators are short on time and looking for ways to support student growth. Many administrators buy these assessments to help their teachers, but they have been sold a bill of goods.

This brings us back to learning loss. To remedy learning loss and unfinished learning, each student’s gaps must be pinpointed to specific instructional points in a scope and sequence of skills and concepts. Saying that a student’s gaps are in fractions, multiplication, phonics, and comprehension is not good enough. Where in fractions? Where in phonics? Why is comprehension low? Learning loss has to be identified at each student’s zone of proximal development: the skills the student is ready to learn with instruction but cannot yet perform independently.

When your school or district reviews assessment tools marketed as diagnostic, look at the student report. If it walks like a duck and quacks like a duck, it’s a duck. If the data is vague, doesn’t tell you why a student is struggling or what to teach next, or uses terms like “developmentally ready” or “likely ready,” it is not diagnostic. Ask yourself: Is this measure good enough to inform the present levels of performance in an IEP (Individualized Education Program, in special education) or to set weekly goals for a student with an IEP?

Screeners and assessments that purport to be diagnostic, but most assuredly are not, do real damage. Teachers can’t use the data to support learning at the individual level, student learning gaps continue to grow, and the educational system ultimately fails to provide access and equity to every student.