Understanding Our AI Leadership

Harnessing AI to Empower Teachers.

AI Leadership in Special Education: Diagnostics, Compliance, and Context Engineering

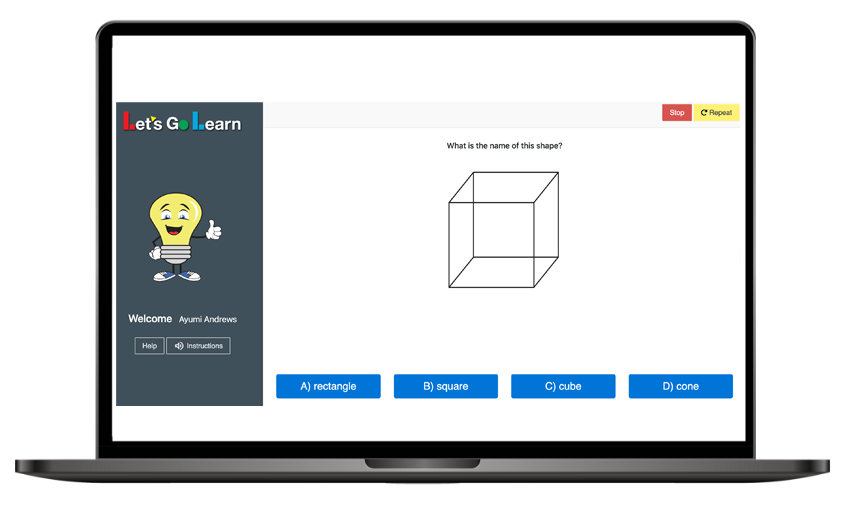

Let’s Go Learn transforms how IEPs are created by tackling the two hardest parts of special education: getting accurate present levels and keeping progress monitoring up to date. This alone is transformative for teachers, empowering them to accomplish one of the most time-consuming and difficult tasks in special education.

Meet Airma, Let’s Go Learn’s Learning Plan AI Assistant

From there, we optionally layer on AI when schools are ready. Unlike first-generation AI tools that only work with what teachers type or paste in, we start with precision diagnostic assessments in reading and math. This gives our AI validated, baseline data to build from — not guesswork. With every teacher quiz or follow-up assessment, new data overlays the baseline, instantly generating fresh scores and growth insights. That updated information is what feeds our AI, so goals and recommendations are always anchored in real, current performance.
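To make the overlay idea concrete, here is a minimal sketch of how follow-up quiz scores might sit on top of a diagnostic baseline. It is an illustration only, not Let's Go Learn's actual data model: the `SkillProfile` class, its method names, the skill names, and the grade-equivalent values are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class SkillProfile:
    """Grade-equivalent scores per skill. All names here are hypothetical."""

    baseline: dict[str, float]  # scores from the initial diagnostic assessment
    updates: dict[str, float] = field(default_factory=dict)  # later quiz scores

    def overlay(self, skill: str, score: float) -> None:
        # A newer quiz result replaces any earlier update for the same skill.
        self.updates[skill] = score

    def current(self) -> dict[str, float]:
        # Latest data wins; skills with no follow-up keep their baseline score.
        return {**self.baseline, **self.updates}

    def growth(self) -> dict[str, float]:
        # Growth is reported only for skills that have a follow-up score.
        return {
            skill: round(score - self.baseline[skill], 2)
            for skill, score in self.updates.items()
            if skill in self.baseline
        }


profile = SkillProfile(baseline={"phonics": 2.1, "vocabulary": 3.0, "fractions": 2.5})
profile.overlay("phonics", 2.6)
print(profile.current())  # {'phonics': 2.6, 'vocabulary': 3.0, 'fractions': 2.5}
print(profile.growth())   # {'phonics': 0.5}
```

Keeping the baseline and the updates separate means the original diagnostic result is never overwritten, so growth can always be measured against it.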

We call this approach Context Engineering — embedding validated diagnostic context directly into AI so it can generate precise first-draft PLAAFP statements, SMART goals, and intervention supports. Teachers remain in control, while AI automates the hardest lift: translating evolving diagnostic data into usable, eloquently written documentation.
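As a rough illustration of Context Engineering, the sketch below packs structured diagnostic data into the prompt so the model drafts from scores rather than from free-typed notes. Everything in it is an assumption made for the example: the `build_plaafp_prompt` function, the field names, the gap calculation, and the instruction wording are hypothetical and do not describe the product's real prompts.

```python
import json


def build_plaafp_prompt(student_ref: str, scores: dict[str, float], enrolled_grade: int) -> str:
    """Assemble a prompt whose only student facts come from diagnostic data."""
    context = {
        "student_ref": student_ref,  # opaque reference, never a real name
        "enrolled_grade": enrolled_grade,
        "grade_equivalent_scores": scores,
        # A gap is how far a skill sits below the enrolled grade level.
        "gaps": {
            skill: round(enrolled_grade - score, 1)
            for skill, score in scores.items()
            if score < enrolled_grade
        },
    }
    return (
        "Draft a PLAAFP statement and one SMART goal per gap.\n"
        "Use only the data in the JSON below; do not infer anything else.\n"
        + json.dumps(context, indent=2, sort_keys=True)
    )


prompt = build_plaafp_prompt("student-001", {"phonics": 2.6, "fractions": 4.2}, enrolled_grade=4)
print(prompt)
```

Because the context is serialized from structured fields, a teacher or reviewer can check every number in the draft against the data that was sent.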

AI vs. Humans: Who Writes Better IEPs

What Sets Us Apart: Present Levels, Progress Monitoring, and Full IEP Workflow

- Present Levels Made Easy – We solve the hardest challenge for special education teachers: generating accurate, CASE-endorsed, validated present levels of performance through our diagnostic assessments.

- Ongoing Progress Monitoring – Every quiz or assignment overlays onto the baseline, updating scores in reading and math. AI always works from fresh, accurate data when drafting goals or lessons.

- Diagnostic-Driven Drafts – Our AI doesn’t guess from prompts — it pulls from precision assessments to generate evidence-based PLAAFPs and SMART goals.

- Context Engineering & Guardrails – Structured diagnostic data ensures AI outputs are reliable, transparent, and defensible, reducing hallucinations and inconsistencies.

- AI Firewall & Data Privacy – Student information is de-identified before reaching AI, ensuring FERPA compliance and acting as a trusted firewall between schools and third-party AI. In addition, teacher and school information is never sent. You work anonymously in our system with AI.

- Cloud-Based Teacher Workspace – Each teacher has a secure, Google Docs-style hub for drafting, revising, sharing, and finalizing IEPs. No file juggling, just seamless collaboration and built-in versioning.

- Compliance Ready – With DPAs already in place across districts, adoption is seamless — no new legal work or setup required. Role-based access, audit trails, and oversight tools come standard.

Official CASE webinar with our CEO, Richard Capone, talking about how AI is positioned to change special education.

- Scalable & District-Ready – Built for both individual teachers and enterprise rollouts, with admin dashboards, usage analytics, customizable frameworks, and the ability to sync with state reporting systems or third-party SPED repositories.

- Built for Special Ed from the Ground Up – Support for multiple disability categories, state compliance models, service tracking, discrepancy calculators, and intervention alignment.

- Teacher Empowerment – AI is a partner, not a replacement. Teachers can understand, refine, and adjust drafts with full transparency and explainability — all while saving hours of work and reducing burnout.
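The AI firewall described above can be pictured as a token swap: identifying details are replaced with opaque tokens before any text leaves the system, and the real values are restored only on the trusted side. The sketch below is a simplified illustration under stated assumptions. The `Deidentifier` class and the token format are hypothetical, it handles names only, and real de-identification would also need to cover dates, IDs, and other identifiers.

```python
import re
import secrets


class Deidentifier:
    """Swap student names for opaque tokens before text leaves the system."""

    def __init__(self) -> None:
        self._token_by_name: dict[str, str] = {}
        self._name_by_token: dict[str, str] = {}

    def _token_for(self, name: str) -> str:
        # Reuse the same token for a name so drafts stay internally consistent.
        if name not in self._token_by_name:
            token = f"STUDENT_{secrets.token_hex(4).upper()}"
            self._token_by_name[name] = token
            self._name_by_token[token] = name
        return self._token_by_name[name]

    def scrub(self, text: str, names: list[str]) -> str:
        # Replace longer names first so a full name is not split by a first name.
        for name in sorted(names, key=len, reverse=True):
            token = self._token_for(name)
            text = re.sub(rf"\b{re.escape(name)}\b", token, text)
        return text

    def restore(self, text: str) -> str:
        # Re-insert real names into the returned draft, on the trusted side only.
        for token, name in self._name_by_token.items():
            text = text.replace(token, name)
        return text


deid = Deidentifier()
original = "Jordan Lee reads at a 2.6 grade level. Jordan needs phonics support."
safe = deid.scrub(original, ["Jordan Lee", "Jordan"])
print(safe)                # names replaced by STUDENT_... tokens
print(deid.restore(safe))  # original text, names restored
```

In this sketch the mapping between tokens and names lives only in the `Deidentifier` instance, so the third-party AI would see tokens and scores but never the lookup table.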

See Our AI Assistants in Action

With our AI Assistants, educators can:

- Instantly generate draft PLAAFPs and supporting documentation

- Quickly identify skill gaps and next instructional steps

- Write SMART goals for students based on their data in your district’s format

- Review student diagnostic data in an easy-to-digest format

- Manage a document system that stores each student's working files, from draft to final

- Save and use district-wide and teacher-level prompts

Technical Excellence: Engineered for Precision

Before AI, we were already ahead — and we still are.

Our ecosystem was built with precision from day one, with native grade-equivalency scoring and data compatibility that others simply can’t match.

- Seamless System Compatibility: Our quizzes and baseline assessments share a unified data structure.

- Vertical Grade Alignment: Our scoring system clearly shows student progress across grades and subjects.

- Supporting Today’s Standards: We support initiatives like Mastery Checkpoints Program (MCP) and Google’s A2A (Assessment-to-Assignment), helping schools stay aligned with evolving best practices.

- Future-Proof Foundation: Our human-developed assessments ensure long-term reliability, while AI enhances usability.

Our Roadmap: The Future of Personalized AI Support

We’re not stopping here. We’re building a future where our trusted data continues to power increasingly intelligent AI assistance.

Here’s what has arrived! We completed all our 2025 tasks by mid-year:

- Data-first PLAAFPs and SMART Goals: Using anonymized samples of customer PLAAFPs, SMART Goals, and Impact Statements, we refine AI outputs to match district-preferred formats. We push in de-identified student present-level data to automate the writing. Available Now!

- AI Prompt Storage: Custom prompt storage at the teacher or school/district level. Available Now!

- Custom GPT for any organization: We’ll modify and store a custom GPT for you. You can choose the model, system instructions, and more. Available Now!

- Documentation System: As teachers work with AI in the IEP documentation process, we store each document in our system, from “AI-First Draft” to “Final,” streamlining workflows and logistics for teachers. No need to worry about files stored on PCs or scattered across cloud folders. Available Now!

- Firewall Between You and the AI: Teachers, schools, and students are anonymous. Your teachers never communicate directly with AI. We use an anonymous pass-through system providing you with 100% protection and comfort. Available Now!

Click the document to the right to see our most up-to-date AI Roadmap document.